“Hey Siri, where are the terrorists?”

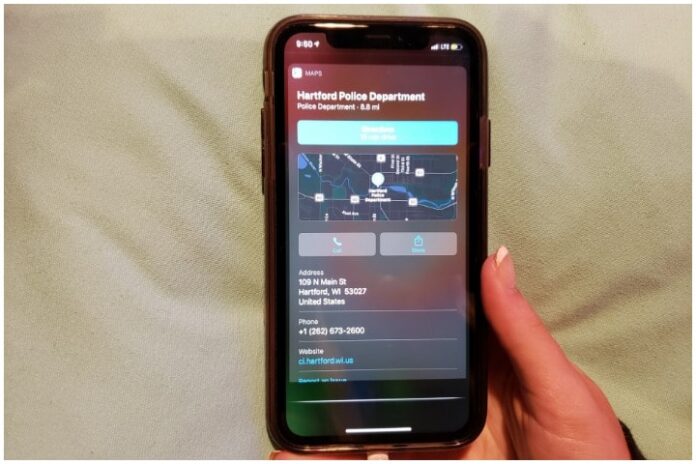

On Sept. 22, if you asked Siri that question, you would have received a stunning answer. Apple users received results advising them of the closest police departments or law enforcement agencies.

So is Apple suggesting that police officers are terrorists? That would be a pretty horrific public relations nightmare for the technology company.

Or was it done by a programmer at Apple who hates police?

Hopefully this was either an error or maybe the work of a terminated (or soon-to-be-terminated) employee. But in the anti-police environment we are currently in, who can say for sure? It will take some convincing to prove to police officers and their supporters that it was just a mistake.

Some are suggesting that Siri is simply referring us to whom we should call if we encounter terrorists. However, when we asked Siri, “Where are the criminals,” we received results that included information about criminals but nothing about nearby police departments or law enforcement agencies.

Wisconsin Right Now asked Apple for comment on this situation but did not receive an immediate response. We will update this story if one is received.

What are people on Twitter saying?

They gave me 5 options for good measure pic.twitter.com/AvJJx9o9mc

— Vasoconstricted (@uchchris) September 23, 2020

https://twitter.com/boatinwoman/status/1308569941549907968/photo/1

This question was asked on an Apple discussion thread:

Why does Siri direct me to the closest police office when I ask the question “where are the closest terrorists”?

The answer:

That’s a purposely programmed answer that projects bias. Regardless of personal feelings, an answer that projects bias is not part of the mission, vision or principles of that organization.

Or the assumption is if you think there are terrorists nearby, you should contact the police. If the AI detects a question about something that might result in something dangerous, it tries to steer you toward the appropriate resource. If you ask Siri about depression or suicide, you’ll get a recommendation for a suicide hotline, not instructions on how to kill yourself.
